The standard error of a coefficient can be difficult to interpret, but in simple terms, it provides an idea for how precise the parameter estimate is.Īnother way to look at this concept of precision is with confidence intervals, which provide you with some idea of how sure you can be of the provided coefficient estimate. For multiple logistic regression, Prism reports two values that provide an idea as to the amount of error in the estimates of the provided parameter coefficients: standard error and profile likelihood confidence intervals. The average that you collect from that sample will have some error due to random variability in the subjects that you selected. However, since you can’t actually collect data from every person, you collect a sample. For example, if you wanted to know average human weight, you could (hypothetically), measure the weight of every single human and calculate the average. Similar to many other analyses (such as multiple linear regression), the only way to know the actual, true value of a given parameter is to collect information on the entire population. Since most people don’t intuitively think in terms of log odds, Prism also offers interpretation based on odds ratios. If we are talking about β2, we can say that for a 1 unit increase in X2, the log odds of Y increases by β2, when all the other X values are held constant. The beta coefficient estimates listed under "Parameter estimates" in the output have the following interpretation: The reason is that we have transformed Y to model the log odds. Interpreting parameter estimates for logistic regression is more complicated than for linear regression. An interpretation of the multiplicative change on the log odds in the form of odds ratiosĪdditional information on P values calculated for these parameter estimates is provided elsewhere.The parameter estimates' effects on the log odds (remember that log odds = β0 + β1 * X1 + β2 * X2 +.

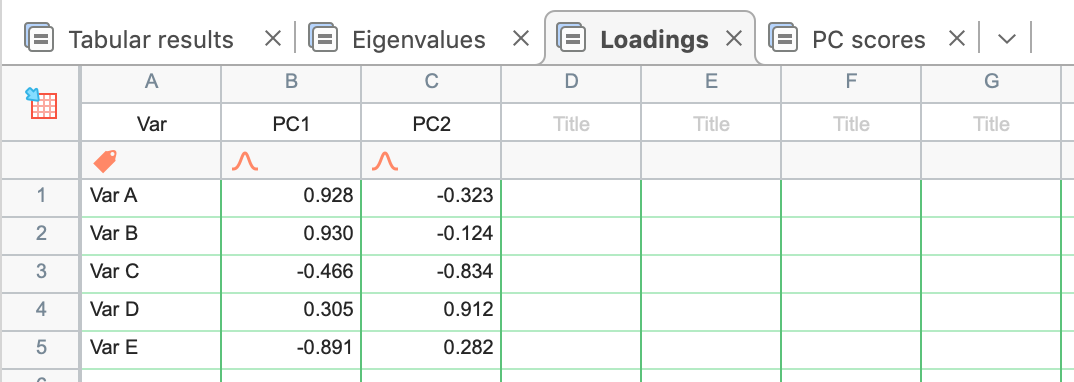

Prism reports the parameter estimates in two ways.
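To make the link between a coefficient, its odds ratio, and its standard error concrete, here is a minimal Python sketch. All numbers (`b0`, `betas`, `se_b2`) and function names are hypothetical and chosen only for illustration; they are not Prism output. The interval shown is a simple Wald-type approximation (estimate ± 1.96 × standard error), used here as a stand-in; Prism reports profile likelihood confidence intervals, which generally give somewhat different limits.

```python
import math

def log_odds(intercept, coefs, xs):
    """Linear predictor: b0 + b1*x1 + b2*x2 + ..."""
    return intercept + sum(b * x for b, x in zip(coefs, xs))

def odds_ratio(beta):
    """Multiplicative change in the odds for a 1 unit increase in X."""
    return math.exp(beta)

def wald_interval(beta, se, z=1.96):
    """Approximate 95% interval on the log odds scale (NOT profile likelihood)."""
    return beta - z * se, beta + z * se

# Hypothetical estimates, for illustration only
b0 = -1.5
betas = [0.8, 0.4]   # beta1, beta2
se_b2 = 0.15         # assumed standard error of beta2

# Increase X2 by 1 unit while holding X1 constant
x_before = [2.0, 3.0]
x_after = [2.0, 4.0]

# The log odds change by exactly beta2 ...
delta = log_odds(b0, betas, x_after) - log_odds(b0, betas, x_before)

# ... which means the odds are multiplied by exp(beta2)
odds_before = math.exp(log_odds(b0, betas, x_before))
odds_after = math.exp(log_odds(b0, betas, x_after))
ratio = odds_after / odds_before

# Exponentiating the interval limits gives an interval for the odds ratio
lower, upper = wald_interval(betas[1], se_b2)
or_lower, or_upper = math.exp(lower), math.exp(upper)

print(f"change in log odds: {delta:.3f}")
print(f"odds ratio: {odds_ratio(betas[1]):.3f}")
print(f"approximate 95% interval for the odds ratio: {or_lower:.3f} to {or_upper:.3f}")
```

A smaller standard error gives a narrower interval, which is the sense in which both quantities describe the precision of the estimate.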
